
Slashcam News : Autodesk Smoke 2013 For Mac

Tools and technical news about digital cinema's workflow, DSLRs and more. Follow Digital Cinema Tools on Twitter @tierible. During this hour-long in-depth session, Stuart will look at some of the techniques WTHR employed making this ident -- from editing to compositing, from fixing to finishing -- a little of. Autodesk has begun shipping the Autodesk Smoke 2013 professional video editing software. Smoke 2013 offers editing and effects within a single, efficient timeline-based workflow and a sleek user interface. The software runs on a wide variety of Apple MacBook Pro and Apple iMac computers.

(Fremont, California-May 31, 2013) Blackmagic Design announced today that DeckLink, UltraStudio Thunderbolt and Intensity products will be compatible with Smoke 2013, with the release of Extension 1 by Autodesk. Smoke 2013, Extension 1, is available now to Smoke subscription users. Blackmagic Design’s DeckLink, UltraStudio and Intensity lines include the world’s highest performance capture and playback cards for Mac, Windows and Linux.

Including both PCIe and Thunderbolt based technology, these products come in both internal and external models and are used by professionals globally in every aspect of film and video production.

“In the post world, customers want the ability to mix and match the applications and hardware that work best for them. Now with the compatibility between our desktop video products and Smoke, we are creating a seamless, fluid workflow, enabling our customers to work with the leading compositing and finishing software tools available today,” said Grant Petty, CEO, Blackmagic Design.

The ongoing collaboration between Blackmagic Design and Autodesk will include the testing and development of integrated workflows between Autodesk technology and Blackmagic Design’s PCIe and Thunderbolt based desktop video capture and playback products, including DeckLink, UltraStudio and Intensity product lines.

About Blackmagic Design

Blackmagic Design creates the world’s highest quality video editing products, digital film cameras, color correctors, video converters, video monitoring, routers, live production switchers, disk recorders, waveform monitors and film restoration software for the feature film, post production and television broadcast industries. Blackmagic Design’s DeckLink capture cards launched a revolution in quality and affordability, while the company’s Emmy™ award winning DaVinci color correction products have dominated the television and film industry since 1984. Blackmagic Design continues ground breaking innovations including stereoscopic 3D and 4K workflows. Founded by world leading post production editors and engineers, Blackmagic Design has offices in the USA, United Kingdom, Japan, Singapore, and Australia. For more information, please check.

About Autodesk

Autodesk helps people imagine, design and create a better world. Everyone, from design professionals, engineers and architects to digital artists, students and hobbyists, uses Autodesk software to unlock their creativity and solve important challenges.

For more information visit autodesk.com or follow @autodesk. Autodesk and Smoke are registered trademarks or trademarks of Autodesk, Inc., and/or its subsidiaries and/or affiliates in the USA and/or other countries.

Thanks for the info, i really have to look into it. Nice to also have other people who can contribute something, info or code. Hopefully it will get more frequent. At the moment i'm trying to get the basic things done, like plugins and OpenGL preview. It's crawling a little bit atm, as i also have to get my regular job done. Also i'm trying to get LibRaw into the code again this weekend; i removed parts after testing for the Model-View-Presenter rework.

Ffmpeg was always really good. I already used it in another project (train simulator with real videos) and the performance was much better than reading directories full of individual image files (frames). Maybe someone else or i can make a plugin/module out of it. At the moment the application still lacks a plugin manager, but first steps first.

No problem, I am not really a coder, but really want to push this. Having no floss tool out there which handles cinemaDNG sequences properly and not being a photo app with a photo workflow is great, and I will try to convince others. Ever since magiclantern appeared, or the kickstarted digitalbolex, I hoped for such an effort to rise.


First steps first. Tell me what you need, and I will try to help. As I see you are investigating MXF-wrapped DNG, it would be very helpful if ffmpeg could support this first; maybe just add a feature request and see what happens.


I also think using openimageio would be a good idea: it has raw support, deeply features all the other file formats of interest, has strong support from the vfx industries, and really nice library usage. As a side-point, you could look into or maybe the one or another idea could rise up from there (ofx plugin support;-).

You seem to be a little bit more experienced with this stuff and could do a little investigation about raw libs if you want. I'm a little bit rusty as my daily job has to do with MFC, data structures and large amounts of ugly code (medical devices), not really graphics (besides WPF GUI sometimes), but nevertheless i have enough experience with FFmpeg, OpenGL etc. to get many things done. My latest test was using LibRaw and loaded all the frames, after decoding of course, into RAM.

Memory usage is 1:1, no optimizations, no partial loading to VRAM etc. But it played really nice and fast. At the moment i'm doing a quick prototype of an OpenGL preview pane to get some shaders into play. I would really like to not hassle with raw loading and let the libs do the work. Afterwards de-bayering, color grading, white balancing etc. would get done with shaders (fragment or compute shader).

I am by no means an expert about this.

I just happen to be interested in these technologies, and see what could be connected to get things forward. Could do a little investigation about raw libs if you want. Apart from possible license issues, these come to my mind first: openimageIO ('3-clause BSD' license) also uses libraw, has deep support for exr, dpx, and JPG-2000 for creating DCPs for cinema projectors. And as it gets used inside the programs and pipelines of the big vfx studios, it's constantly developed and quite future-proof, also said to have a very fine API.

The OpenFX plugin benefit would be to be able to use, apart from even commercial ones, many floss ones in one snap: Color correction: Color Transform Language (CTL), OpenColorIO LUT, Color Transfer. Filtering: Blur, Sobel, Non-Local Means Denoising. Geometric distortion: Crop, Resize, Flip, Flop, Pinning, Lens Distort. This could also be interesting: Tuttle Host Library - A C library to load OpenFX plugins and manipulate a graph of OpenFX nodes. So you can use it as a high level image processing library. OpenColorIO is also a great thing to have (think of ACES, color space conversions), Tuttle features it, one abstraction layer above.

And as OpenFX is already built into the node-based compositing solution natron (mpl license), maybe it's a good idea to ask the natron team; they have a tremendously fast dev pace right now, actually get paid for programming floss, and they also use Qt and C++.

As for Bayer demosaicing, and having the most (best) choice in doing it, there is actually rawtherapee which has to be mentioned: AMaZE, IGV, LLMSE, EAHD, HPHD, VNG4, DCB, AHD, fast and bilinear algorithms. RL Deconvolution sharpening tool sounds also nice: Then there is RawSpeed (lgpl) library: RawSpeed is not intended to be a complete RAW file display library, but only act as the first stage decoding, delivering the RAW data to your application.

And that's only the stuff I know about without thorough investigation. Though I do not have much insight into code, I could see great benefits from not reinventing the wheel too often.

Wow, many thanks, that's what i meant. Very good info. You know things that slipped my attention while i was looking for first infos to get started with OpenCine development. I'm also familiar with RawTherapee and Darktable, as i'm a little bit into photography (especially HDR). While i'm developing i try to get closer to their workflow and look. In my opinion they are really straightforward, but i'm open for other opinions too. Have to make a list of control elements which would be used for plugin GUI.

I heard about OpenFX before, but successfully forgot about it. Have to look into it to not 'reinvent the wheel' for plugins and such. Really important thing for me is to have as much as possible processed by GPU. This would deliver generally great performance. Although SSE/SIMD optimized code can be also really quick. Fast previews would benefit workflow and better workflow is what most people want. Another thing that comes to my mind at the moment is Superresolution: But it's more a note to myself, maybe useful in the later development.

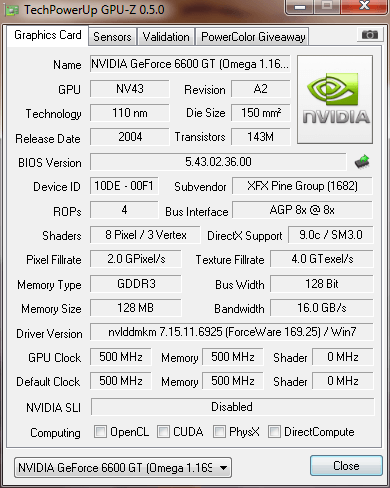

As I understand it the problem with GPU code is that CUDA and OpenCL are really two different things, performance-, feature-wise. There are many small things which makes it complicated to support wide range of computers, blender cycles renderer is suffering from that issue e.g. Also, Nvidia provides OpenCL version 1.0, AMD provides up to date version 1.1, under a restrictive license. Thus, compute shaders would come in handy: But why did Khronos introduce compute shaders in OpenGL when they already had OpenCL and its OpenGL interoperability API? Well, OpenCL (and CUDA) are aimed for heavyweight GPGPU projects and offer more features. Also, OpenCL can run on many different types of hardware (apart from GPUs), which makes the API thick and complicated compared to light compute shaders. Finally, the explicit synchronization between OpenGL and OpenCL/CUDA is troublesome to do without crudely blocking (some of the required extensions are not even supported yet).

With compute shaders, however, OpenGL is aware of all the dependencies and can schedule things smarter. This aspect of overhead might, in the end, be the most significant benefit for graphics algorithms which often execute for less than a millisecond. Since last summer, compute shaders, part of OpenGL 4.3, seem to be fully supported by NVIDIA and AMD.

Even mesa and thus the linux floss drivers are working toward supporting it, but it's not there yet. Nice thing is that OpenCL also runs on CPUs. Thus no extra fiddling if the computer has no proper graphics card/drivers. This guy, Syoyo Fujita, did an experimental raw decoder/player for magiclantern raw files. He is very into OpenGL compute shader, GLSL, very good source: 10-years of open source developer, quite open to talk with, but won't share his raw-realtime-playback code as far as I can tell. Is a floss CUDA jpeg2000 encoder I am aware of.

Creates slow-motion videos via opticalFlow on GPU 'GPU implementation of feature point tracking with and without simultaneous gain estimation', available as libV3D. Superresolution sounds nice, I found quite a few projects on github. (python) he made phd dissertation about it This one seems nice, too (c).

This is one of the pits which i don't want to fall in: missing portability. For example Darktable (i like this software very much) is missing a Windows version (i tried to build it, but had not much time so i gave up for the moment), which led me to rethink the approach of OpenCine from the beginning. And this is also the point why i'm trying to use OpenGL 3.3 (3.0 atm as my laptop has only Intel HD4000 3rd gen), to reach more people. I could aim at 4.2 with direct access features (my desktop uses GTX660), but not many people have up to date hardware.

What do you think, how should the roadmap be for such things? I wouldn't go below OpenGL 3, as sometimes you have to make a clear cut. To be honest, i haven't tested OpenCine on Windows for some time.

But my previous playback version ran pretty well, was looking exactly the same as on Linux. I will update it again when OpenGL playback is working. At the moment it is showing still DNG image (LibRaw/dcraw), had problems at first to show something besides black rectangle. Also still trying to structure the software architecture, separate views and handling of data. Maybe you should be added to the project and extend Wiki with your infos and ideas. Just write to Sebastian Pichelhofer if you have interest.

Some of the projects are not really maintained anymore, i'm not saying outdated, cause algorithms which are implemented correctly are still used for years or decades in famous software packages. I also started to look into OpenFX API (not OpenFX, Blender-like software), hopefully this thing is flexible enough to use the libs you suggested as i like to have all the tools inside OpenCine and not some command line tools (at least not visible). It's my personal opinion on that topic, but i like polished software GUI and workflow and not popping up command line windows which show cryptic output of some process. I think i will record a video or two and upload to YouTube, when i have something to show.

Sorry for the late reply, but it's pre-christmas time and as usual my daily work got more stressful because of it, so the progress slowed down at the moment. But nevertheless i'm still on it and if not coding, then thinking about structuring, architecture, writing some small prototypes to check my ideas etc.

As always, thanks, it's a great collection of information. My latest progress included playing back raw Bayer data which i got from LibRaw (CPU usage about 2%-4% on Dell Latitude E6530, 1920x1080). What isn't implemented now, and what will be tested by me as soon as i have some free time, is OpenCL demosaicing. I got OpenCL working under Linux and have to look into it more thoroughly. Also OpenFX/TuttleOFX is on the radar.

But OC isn't there at the moment, besides missing UI generation for plugins or processing pipeline, i'm still not done with structuring basic architecture to separate views and processing, also cleanup of code is missing to get fresh start on new features. I committed some really ugly parts of source code. Edit: Trying to NOT reinvent the wheel, but many things on that topic are not always portable or maintained. With 'reinventing the wheel' i meant that i prefer to use existing libs if available and also possible to compile without much hassle on Win/Linux. On the other hand fewer dependencies means better portability as some projects tend to bring a lot of things with them which aren't necessary for OC. I tried OpenImageIO, but had trouble getting it to build.

This happened also with some other libs as well. Another task which has to be done in the course of OC development.

All this info has to be moved to the wiki, as it is a really good reference to look into. Can you do it?

What i haven't done at the moment is a proper roadmap. This would set some goals and make planning easier. ArrayFire and Movit look nice. For me It doesn't matter which library is used at the end, but the knowledge which one has to acquire is of importance. At the moment i'm trying to understand how to process Bayer pattern with 2x2 matrix and generate an array with 3 float values (RGB) for each pixel out of it. Also multi-threaded processing is an important thing. Later it can be easily ported, i (personally) have no problem with refactoring a lot of things.
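As a rough illustration of the Bayer step described above, here is a minimal sketch in Python rather than the project's own code. It assumes an RGGB 2x2 layout, 16-bit input with a white level of 65535, and a deliberately crude method: every pixel in a 2x2 block receives that block's R, averaged G and B. The function name `demosaic_blocks` is hypothetical, and a real demosaicer (bilinear, VNG, AHD) would interpolate between neighbouring blocks instead.

```python
def demosaic_blocks(mosaic, white_level=65535):
    """Turn a Bayer mosaic into one (R, G, B) float triple per pixel.

    Assumptions (not taken from OpenCine): RGGB layout, even image
    dimensions, and nearest-block colour instead of real interpolation.
    """
    height = len(mosaic)
    width = len(mosaic[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")

    rgb = [[None] * width for _ in range(height)]
    for y in range(0, height, 2):
        for x in range(0, width, 2):
            # RGGB: top-left is red, bottom-right is blue,
            # the two remaining sites are green.
            r = mosaic[y][x] / white_level
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2 / white_level
            b = mosaic[y + 1][x + 1] / white_level
            pixel = (r, g, b)
            # Share the block colour with all four pixels of the block.
            for dy in (0, 1):
                for dx in (0, 1):
                    rgb[y + dy][x + dx] = pixel
    return rgb
```

Because each 2x2 block is independent, the outer loops split naturally across threads or GPU work items, which is one reason this layout is a convenient starting point before moving to a better algorithm.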

DPX, MXF etc. are also on the radar. That's why the IDataProvider interface is there, but it hasn't been completed yet. OC is limited to DNG folders at the moment (without subfolders), but the import will be extended by a dialog window which scans folders with sub-folders and allows the user to select items to be imported.
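The folder scan behind such an import dialog could look roughly like the sketch below. It is an assumption-laden illustration in Python, not OpenCine code: the function name `find_dng_folders` is hypothetical, and a clip is simply taken to be any folder that directly contains at least one `.dng` file.

```python
import os


def find_dng_folders(root):
    """Return a sorted list of (folder, frame_count) pairs.

    Hypothetical helper: walks root and all sub-folders and reports
    every folder that directly contains .dng files (case-insensitive).
    """
    clips = []
    for folder, _subdirs, files in os.walk(root):
        frames = [name for name in files if name.lower().endswith(".dng")]
        if frames:
            clips.append((folder, len(frames)))
    return sorted(clips)
```

The returned list is what a dialog would present for selection; other containers such as MXF would need their own detection rule behind the same kind of interface.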

Afterwards one can manage clips: rename them, edit meta-data and so on. How this will be accomplished, e.g. by selecting required data ('DNG folder', 'MXF'.) or automatically, isn't elaborated at the moment. But as always: First things first.

No need to rush.

I was successfully able to use the openCV de-bayering API previously for 8 bit and 16 bit images, using AHD and VNG as I recall. Let me know if that would be of use and I can dig up the code. I was working on performing the XYZ calibration transforms we were getting from Argyll and converting the final output to sRGB for image previews (for example on camera previews).

Gabe

On Mon, Dec 1, 2014 at 3:56 AM, Sebastian Pichelhofer wrote: Some material that might be interesting: source code of dcraw: source code of darktable:

I have no actual plan about further libs atm, have to get some more progress to do some research about workflow.
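For the XYZ-to-sRGB preview conversion mentioned above, a minimal sketch in Python follows. It assumes the XYZ values are already relative to a D65 white point and scaled so that Y = 1.0 is white; it uses the commonly published sRGB matrix and transfer curve rather than anything from Argyll or the poster's own code. The names `xyz_to_srgb` and `srgb_encode` are hypothetical.

```python
# Commonly published XYZ (D65) -> linear sRGB matrix, four-decimal rounding.
XYZ_TO_LINEAR_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def srgb_encode(linear):
    """Apply the sRGB transfer curve to one linear channel value."""
    linear = min(max(linear, 0.0), 1.0)  # clip out-of-gamut values
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def xyz_to_srgb(x, y, z):
    """Convert one XYZ colour to display-ready sRGB in the range 0..1."""
    return tuple(
        srgb_encode(row[0] * x + row[1] * y + row[2] * z)
        for row in XYZ_TO_LINEAR_SRGB
    )
```

A camera-specific calibration matrix would be applied before this step to get from camera RGB to XYZ; the hard clip here is the simplest possible gamut handling and only suitable for previews.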

You could write down your experience with ArrayFire and other libs, possible build problems, pits or even tricks. Or maybe think about possible workflows and how the user should accomplish things. For example starting with 'Manage' layout where user imports or manages different clips to 'Process' layout to get basic effects/grading applied and further to 'Export' which explains itself. Intuitive is the keyword. Edit: I haven't used video related software, like Premiere, for a long time. That's why i lack some knowledge about today's progress and needs on that topic. Will try to wrap my head around the libraries.

Hm, that's plenty of infos and really interesting. Have to look into that after work, but it will take much more time to analyze the info and get the roadmap done.

Yesterday i started porting Wiki over to Apertus. Just a note: I like some GUI elements of the tools you listed. Good to get some inspiration. But for OC we should think about some 'unique characteristic' (if possible) for UX/UI in the future. I know, not an easy task, cause there are so much of complete video processing software packages out there.

I learned about MOX some weeks ago and mentioned it to Sebastian then. We definitely shouldn't lose sight of it.

And gather as much infos as possible to be prepared to implement import/export. By the way: Do you know some free OpenFX plug-ins? I need some to test the integration later, but i found only commercial ones. Simple ones would also fit for tests.

Maybe a dumb question and i should look into OpenFX or TuttleFX source folder. I would stick to 'Stack' first.

Let's see it as Darktable for video processing for now. Afterwards it can be changed and extended. I should be doing things more pragmatic, that would accelerate the whole progress, but it often ends in ugly/unmaintainable code. Maybe you, and other people too, get some interesting ideas or wishes about UI.

Not so good example of UI (for me at least): I forgot the name of the video software with nodes shown as circles, seems interesting, but irritates me just by looking at the screenshots. Edit: Googled it, and found that you mentioned it already - Autodesk Smoke. I like node-based interfaces as i think they are very flexible, but i will try to stay away from them in OC as long as possible to keep things simple. :D Just a note: What is very important for such interfaces is a grouping feature, where you group nodes and so get a new node with user-defined in- and outputs, like Blender does it. Otherwise it gets very messy and confusing.

But nevertheless your links are very interesting as always. I lose myself in numerous ideas for OC, have to write them down asap. I'm doing some sequence diagrams at the moment to focus on next tasks for clip import. But it's another thing which i would stash away for future at the moment.

In two weeks i have three weeks of holidays and will try to get progress rolling on and not just creeping slowly like now. Could you move your info from here to apertus Wiki, if you haven't started to do so? And one thing which we should consider, is discussing such things here: I have nothing against discussing here, but it will just pollute the issue too much, which should stay focussed on ML and FFmpeg related stuff. A forum is better suited for our ideas on progress. Edit: Seems like nice tool to use for UI discussions: Edit2: Got another tool, as i don't like to spread my personal data everywhere on the internet, Pencil.

Tried to use OpenImageIO as LibRaw alternative for simple DNG loading, but all i get is the thumbnail.

It's a pity that RawSpeed originally isn't packed into a library. There was a problem getting it compiled on Windows. Update: Now i have finally wrapped my head a little bit around loading real raw data from LibRaw.

My prototype reads DNG file, moves the values according to 2x2 Bayer pattern into right position (R, G or B, unsigned short at the moment, thinking about float or at least half-float) and writes, thanks to OIIO, the data to TIFF file to get some preview. No processing done at the moment, at least not in the prototype. Next step is to get the playback working again in OC and then use the tests from the prototype to get more progress done. I already thought about a roadmap, but had no time while doing my daily work. Hm, i'll create a 'Features' page in the apertus° Wiki for it.
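The "move the values into the right position" step of the prototype can be sketched as follows. This is an illustration in Python under stated assumptions, not the actual prototype: the mosaic is a plain list of rows, the default 2x2 pattern is RGGB, and the function name `place_bayer_samples` is hypothetical. No interpolation happens, so sites a channel does not cover stay at zero, matching the "no processing done" state described above.

```python
def place_bayer_samples(mosaic, pattern=("R", "G", "G", "B")):
    """Scatter Bayer samples into separate R, G and B planes.

    pattern lists the colours of a 2x2 cell in row-major order
    (top-left, top-right, bottom-left, bottom-right); RGGB is an
    assumption and must match the sensor in practice.
    """
    height = len(mosaic)
    width = len(mosaic[0])
    planes = {c: [[0] * width for _ in range(height)] for c in "RGB"}
    for y in range(height):
        for x in range(width):
            # Position inside the repeating 2x2 cell selects the channel.
            channel = pattern[(y % 2) * 2 + (x % 2)]
            planes[channel][y][x] = mosaic[y][x]
    return planes["R"], planes["G"], planes["B"]
```

Writing these three planes to a TIFF gives the characteristic dark, colour-speckled preview; filling the zero sites from neighbours is the job of the later demosaicing stage.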

Edit: At the moment still working on things which are mostly relevant for OC development, to get more or less a solid foundation and then proceed to add features. Next step, after playback, is to get two buffers per frame, original and processed data, which results in further things to estimate, like RAM usage and what is an acceptable limit. It's an important step before doing simple processing. Also still thinking about how to use OpenFX/TuttleOFX and also OpenCL for parallel processing. Too many ideas atm which i have to prioritize.
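To get a feeling for the RAM question raised above, here is a small back-of-the-envelope sketch in Python. The sample formats are assumptions for illustration only: the original buffer is taken as one 16-bit Bayer sample per pixel and the processed buffer as three 32-bit floats per pixel. The function names are hypothetical.

```python
def frame_bytes(width, height, channels, bytes_per_sample):
    """Size of one uncompressed frame buffer in bytes."""
    return width * height * channels * bytes_per_sample


def clip_memory(frames, width, height):
    """RAM needed when every frame keeps an original and a processed buffer.

    Assumed formats: 16-bit single-channel Bayer for the original,
    float32 RGB for the processed copy.
    """
    original = frame_bytes(width, height, 1, 2)
    processed = frame_bytes(width, height, 3, 4)
    return frames * (original + processed)


if __name__ == "__main__":
    one_second = clip_memory(24, 1920, 1080)
    print(f"24 frames at 1920x1080: {one_second / 2**20:.1f} MiB")
```

Under these assumptions one 1920x1080 frame costs about 29 MB, so a single second at 24 fps already needs roughly 700 MB; half-float processed buffers or a sliding window of cached frames would be the obvious ways to bring that down.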

Merry Christmas! I got the playback working again in the meantime. Not all things are really cleaned up at the moment (at least to my liking), e.g. UI elements still have default/not very descriptive names in code or chunks of code which are commented out and are obsolete, but that's what i will do while implementing further features. I will do another video to show current progress soon, but this time the link to the YT video will go to apertus° Wiki. And also the information from Github Wiki will be moved there step by step. Edit: And yes, i know that the code isn't beautiful in some parts at the moment.;) Edit 2: I also haven't used cppcheck that much lately, together with ugly code (at prototype level) it may result in memory leaks or similar atm.

Hi, it would help a lot if you would move the info to Wiki. Nothing really urgent at the moment, as i'm trying to get some tests related to processing done. Hope to get some results this weekend, because there is not much time on work days. One thing that i consider to do, is to switch to Windows for development. The reason is simple, the tools under Windows are better, e.g. CodeXL, NVIDIA Nsight, Visual Studio etc.

Linux is great too, but the driver for Intel HD cards doesn't have some things included which are present under Windows. It would also improve quality of the code and fix some bugs, which GCC doesn't recognize as such (there is also the other case where VS doesn't complain, but GCC does). You could look into the issues, also other entries are in the lab at lab.apertus.org. Phabricator is very good as bug tracker as i can assign task to commit.

This helps to check the history and resolve bugs in the future. My hardware differs on laptop and desktop PC. Laptop has Intel HD 4000 3rd Gen and desktop NVIDIA 660. While the NVIDIA drivers are good, the Intel driver, or better to say Mesa, is not up to the level of the Intel driver for Windows. GDebugger is no more and CodeXL as successor still does not run on pure Intel HD graphics card, but no problem to run it on NVIDIA. I'm using external HDD with Linux partition for development, by the way, so i can switch between different machines easily and usually the Linux loads the right driver at startup.

I'm already using bleeding edge repository for some time and updates are coming, but it's better than the driver from the default repo which gave me graphics glitches for some Qt/OpenGL examples. You are right, i'm working every day with VS, but that's not the main point for the switch as i'm comfortable with QtCreator also. Every tool has advantages or disadvantages, depends on the task. It's more for checking CMake scripts and library requirements under Windows, e.g. there is an issue with hardcoded paths.

I'm just going to alternate between Win and Linux (don't want to think about Mac OS X for now, but there are possibilities.) to verify portability and to fix bugs which differ between compilers. There are such tools like Visual Leak Detector or HeapInspector which are really helpful for tracing memory leaks and VS is also full of different debug tools. LinuxMint 17.1 is my current distro for development. Edit: Checked newest CodeXL, still does not work under Linux with Intel HD: 'OpenGL module not found'.